Best of TOC, 3rd Edition: Analysis and Ideas about the Future of Publishing

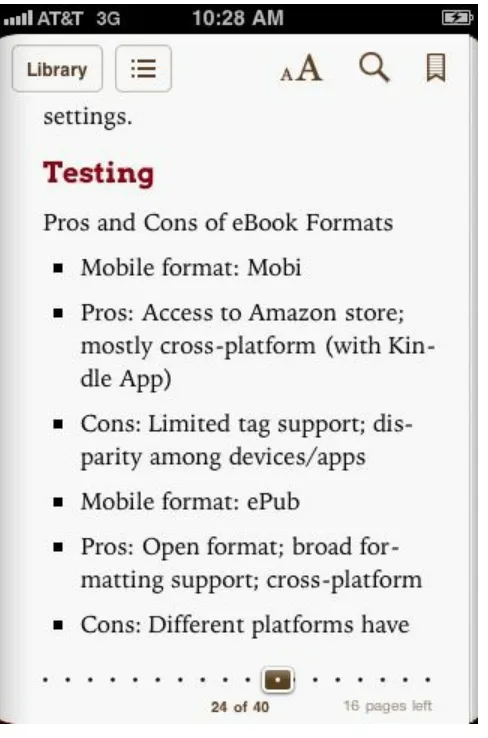

![Figure 13-5. Three books produced by the BookJS in-browser typesetting library [photo by Kristin Tretheway]](https://thumb-ap.123doks.com/thumbv2/123dok/3940033.1883453/235.612.84.530.73.527/figure-books-produced-bookjs-browser-typesetting-library-kristintretheway.webp)